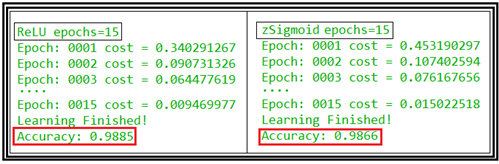

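On the XOR problem with a wide, deep neural network, replacing the ReLU function with zSigmoid(z) already gave nearly identical results, so here we apply the same change to the MNIST CNN code. With ReLU, the MNIST CNN reaches a recognition rate of about 98.85% at learning rate = 0.001 and 15 training epochs.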

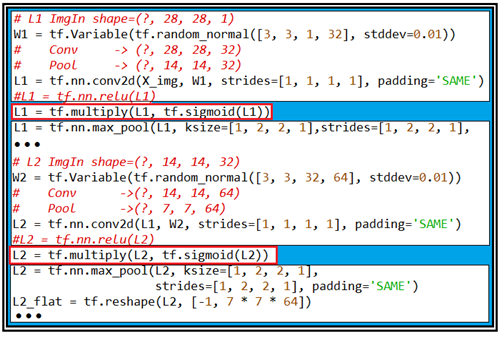

In the CNN code, ReLU is applied between the conv2d filtering step and the max_pooling step. Modify the code at that position as shown below and run it.

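According to the results listed below, there seems to be little difference. ReLU is widely used across all areas of machine learning, but it is worth pointing out that if ReLU ever causes problems, there are various alternatives, including zSigmoid(z).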
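To make the comparison concrete, here is a minimal NumPy sketch of the two activations. The function z·sigmoid(z) is commonly known as SiLU or Swish. The helper names `relu`, `sigmoid`, and `zsigmoid` are chosen for illustration only and are not part of the script below; it simply evaluates both functions at a few sample points.

```python
import numpy as np

def relu(z):
    # ReLU: passes positive values through, clamps negatives to zero
    return np.maximum(z, 0.0)

def sigmoid(z):
    # Logistic sigmoid
    return 1.0 / (1.0 + np.exp(-z))

def zsigmoid(z):
    # z * sigmoid(z), the activation used in place of ReLU in this post
    return z * sigmoid(z)

z = np.array([-5.0, -1.0, 0.0, 1.0, 5.0])
print("z        :", z)
print("relu     :", relu(z))
print("zsigmoid :", zsigmoid(z))
```

For large positive z the two functions nearly coincide, and for large negative z both approach zero. The difference is around the origin: zSigmoid(z) is smooth and slightly negative for negative inputs, so its gradient there is not exactly zero, which is one plausible reason it can stand in for ReLU.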

#mnist_cnn_zsigmoid_01.py

# MNIST and Convolutional Neural Network

import tensorflow as tf

import random

import numpy as np

from tensorflow.examples.tutorials.mnist import input_data

tf.set_random_seed(777) # reproducibility

mnist = input_data.read_data_sets("MNIST_data/", one_hot=True)

# parameters

learning_rate = 0.001

training_epochs = 15

batch_size = 100

# input place holders

X = tf.placeholder(tf.float32, [None, 784])

X_img = tf.reshape(X, [-1, 28, 28, 1]) # img 28x28x1 (black/white)

Y = tf.placeholder(tf.float32, [None, 10])

# L1 ImgIn shape=(?, 28, 28, 1)

W1 = tf.Variable(tf.random_normal([3, 3, 1, 32], stddev=0.01))

# Conv -> (?, 28, 28, 32)

# Pool -> (?, 14, 14, 32)

L1 = tf.nn.conv2d(X_img, W1, strides=[1, 1, 1, 1], padding='SAME')

#L1 = tf.nn.relu(L1)

L1 = tf.multiply(L1, tf.sigmoid(L1))

L1 = tf.nn.max_pool(L1, ksize=[1, 2, 2, 1],strides=[1, 2, 2, 1], padding='SAME')

'''

Tensor("Conv2D:0", shape=(?, 28, 28, 32), dtype=float32)

Tensor("Relu:0", shape=(?, 28, 28, 32), dtype=float32)

Tensor("MaxPool:0", shape=(?, 14, 14, 32), dtype=float32)

'''

# L2 ImgIn shape=(?, 14, 14, 32)

W2 = tf.Variable(tf.random_normal([3, 3, 32, 64], stddev=0.01))

# Conv ->(?, 14, 14, 64)

# Pool ->(?, 7, 7, 64)

L2 = tf.nn.conv2d(L1, W2, strides=[1, 1, 1, 1], padding='SAME')

#L2 = tf.nn.relu(L2)

L2 = tf.multiply(L2, tf.sigmoid(L2))

L2 = tf.nn.max_pool(L2, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

L2_flat = tf.reshape(L2, [-1, 7 * 7 * 64])

'''

Tensor("Conv2D_1:0", shape=(?, 14, 14, 64), dtype=float32)

Tensor("Relu_1:0", shape=(?, 14, 14, 64), dtype=float32)

Tensor("MaxPool_1:0", shape=(?, 7, 7, 64), dtype=float32)

Tensor("Reshape_1:0", shape=(?, 3136), dtype=float32)

'''

# Final FC 7x7x64 inputs -> 10 outputs

W3 = tf.get_variable("W3", shape=[7 * 7 * 64, 10], initializer=tf.contrib.layers.xavier_initializer())

b3 = tf.Variable(tf.random_normal([10]))

logits = tf.matmul(L2_flat, W3) + b3

# define cost/loss & optimizer

cost = tf.reduce_mean(tf.nn.softmax_cross_entropy_with_logits(logits=logits, labels=Y))

optimizer = tf.train.AdamOptimizer(learning_rate=learning_rate).minimize(cost)

# initialize

sess = tf.Session()

sess.run(tf.global_variables_initializer())

# train my model

print('Learning started. It takes sometime.')

for epoch in range(training_epochs):

    avg_cost = 0

    total_batch = int(mnist.train.num_examples / batch_size)

    for i in range(total_batch):

        batch_xs, batch_ys = mnist.train.next_batch(batch_size)

        feed_dict = {X: batch_xs, Y: batch_ys}

        c, _ = sess.run([cost, optimizer], feed_dict=feed_dict)

        avg_cost += c / total_batch

    print('Epoch:', '%04d' % (epoch + 1), 'cost =', '{:.9f}'.format(avg_cost))

print('Learning Finished!')

# Test model and check accuracy

correct_prediction = tf.equal(tf.argmax(logits, 1), tf.argmax(Y, 1))

accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))

print('Accuracy:', sess.run(accuracy, feed_dict={X: mnist.test.images, Y: mnist.test.labels}))

# Get one and predict

r = random.randint(0, mnist.test.num_examples - 1)

print("Label: ", sess.run(tf.argmax(mnist.test.labels[r:r + 1], 1)))

print("Prediction: ", sess.run(tf.argmax(logits, 1), feed_dict={X: mnist.test.images[r:r + 1]}))

# plt.imshow(mnist.test.images[r:r + 1].reshape(28, 28), cmap='Greys', interpolation='nearest')

# plt.show()

'''

ReLU training epochs = 15

Epoch: 0001 cost = 0.340291267

Epoch: 0002 cost = 0.090731326

Epoch: 0003 cost = 0.064477619

Epoch: 0004 cost = 0.050683064

Epoch: 0005 cost = 0.041864835

Epoch: 0006 cost = 0.035760704

Epoch: 0007 cost = 0.030572132

Epoch: 0008 cost = 0.026207981

Epoch: 0009 cost = 0.022622454

Epoch: 0010 cost = 0.019055919

Epoch: 0011 cost = 0.017758641

Epoch: 0012 cost = 0.014156652

Epoch: 0013 cost = 0.012397016

Epoch: 0014 cost = 0.010693789

Epoch: 0015 cost = 0.009469977

Learning Finished!

Accuracy: 0.9885

zSigmoid training epochs = 15

Epoch: 0001 cost = 0.453190297

Epoch: 0002 cost = 0.107402594

Epoch: 0003 cost = 0.076167656

....

Epoch: 0014 cost = 0.017381203

Epoch: 0015 cost = 0.015022518

Learning Finished!

Accuracy: 0.9866

zSigmoid training epochs = 20

Epoch: 0001 cost = 0.411341488

Epoch: 0002 cost = 0.101106419

Epoch: 0003 cost = 0.072218707

.....

Epoch: 0013 cost = 0.018847992

Epoch: 0014 cost = 0.015929094

Epoch: 0015 cost = 0.014185585

Epoch: 0016 cost = 0.012228203

Epoch: 0017 cost = 0.012414648

Epoch: 0018 cost = 0.010145885

Epoch: 0019 cost = 0.010030749

Epoch: 0020 cost = 0.007734325

Learning Finished!

Accuracy: 0.9875

zSigmoid training epochs = 25

Epoch: 0001 cost = 0.411341488

Epoch: 0002 cost = 0.101106419

....

Epoch: 0023 cost = 0.005966733

Epoch: 0024 cost = 0.006369757

Epoch: 0025 cost = 0.005791829

Learning Finished!

Accuracy: 0.9897

zSigmoid training epochs = 30

Epoch: 0001 cost = 0.411341488

Epoch: 0002 cost = 0.101106419

....

Epoch: 0028 cost = 0.002725583

Epoch: 0029 cost = 0.004054611

Epoch: 0030 cost = 0.004156343

Learning Finished!

Accuracy: 0.9891

'''
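The accuracies in the logs above can be put side by side with a short script. This is only a sketch: the numbers are copied from the logs, the dictionary layout and variable names are made up for illustration, and it assumes the standard MNIST test split of 10,000 images.

```python
# Test accuracies copied from the run logs above; keys are (activation, epochs).
reported = {
    ("ReLU", 15): 0.9885,
    ("zSigmoid", 15): 0.9866,
    ("zSigmoid", 20): 0.9875,
    ("zSigmoid", 25): 0.9897,
    ("zSigmoid", 30): 0.9891,
}

TEST_SET_SIZE = 10000  # assumed size of the MNIST test split
baseline = reported[("ReLU", 15)]

# Difference from the ReLU baseline, expressed as a count of test images
diffs = {}
for (name, epochs), acc in reported.items():
    diffs[(name, epochs)] = round((acc - baseline) * TEST_SET_SIZE)
    print("{:8s} {:2d} epochs: {:.2f}%  ({:+d} images vs. ReLU)".format(
        name, epochs, acc * 100, diffs[(name, epochs)]))
```

Every setting lands within about 20 test images of the ReLU baseline. Each setting was run only once, so gaps this small may well be within ordinary run-to-run variation.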